Model Evaluation#

Evaluation is the process of measuring the performance of a model. It’s a crucial step in the development of a model. There are various ways to evaluate a model, we will discuss some of the most common ones.

K-Fold Cross Validation#

Testing accuracy for just once doesn’t account for the variance in the data and might give misleading results. K-Fold validation randomly selects one of \(k\) parts of the data set then tests the accuracy on the same. After required number of iterations, the accuracy is averaged

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

df = pd.read_csv('Social_Network_Ads.csv')

X = df.iloc[:, 2:4] # Using 1:2 as indices will give us np array of dim (10, 1)

y = df.iloc[:, 4]

df.head()

| User ID | Gender | Age | EstimatedSalary | Purchased | |

|---|---|---|---|---|---|

| 0 | 15624510 | Male | 19 | 19000 | 0 |

| 1 | 15810944 | Male | 35 | 20000 | 0 |

| 2 | 15668575 | Female | 26 | 43000 | 0 |

| 3 | 15603246 | Female | 27 | 57000 | 0 |

| 4 | 15804002 | Male | 19 | 76000 | 0 |

# Scale

from sklearn.preprocessing import StandardScaler

X_sca = StandardScaler()

X = X_sca.fit_transform(X)

from __future__ import division

from sklearn.model_selection import KFold

from sklearn.metrics import accuracy_score

from sklearn.svm import SVC

kfold_cv = KFold(n_splits=10)

correct = 0

total = 0

for train_indices, test_indices in kfold_cv.split(X):

X_train, X_test, y_train, y_test = X[train_indices], X[test_indices], \

y[train_indices], y[test_indices]

clf = SVC(kernel='linear', random_state=0).fit(X_train, y_train)

correct += accuracy_score(y_test, clf.predict(X_test))

total += 1

print("Accuracy: {0:.2f}".format(correct/total))

Accuracy: 0.82

from sklearn.svm import SVC #support vector classifier

clf = SVC(kernel='linear', random_state=0).fit(X_train, y_train)

# applying k-fold cross validation

from sklearn.model_selection import cross_val_score

accuracies = cross_val_score(clf, X_train, y_train, cv=10)

print(accuracies)

print(accuracies.mean())

print(accuracies.std())

[0.72222222 0.69444444 0.94444444 0.94444444 0.97222222 0.94444444

0.83333333 0.75 0.80555556 0.91666667]

0.8527777777777776

0.0994196120454071

Leave one out cross validation#

Another type of cross validation is leave one out cross validation. Out of the \(n\) samples, one of them is left out and the model is trained on other samples. When K in KFold validation is equal to the number of samples then K-Fold validation is same as leave one out cross validation

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

df = pd.read_csv('Social_Network_Ads.csv')

X = df.iloc[:, 2:4] # Using 1:2 as indices will give us np array of dim (10, 1)

y = df.iloc[:, 4]

df.head()

| User ID | Gender | Age | EstimatedSalary | Purchased | |

|---|---|---|---|---|---|

| 0 | 15624510 | Male | 19 | 19000 | 0 |

| 1 | 15810944 | Male | 35 | 20000 | 0 |

| 2 | 15668575 | Female | 26 | 43000 | 0 |

| 3 | 15603246 | Female | 27 | 57000 | 0 |

| 4 | 15804002 | Male | 19 | 76000 | 0 |

# Scale

from sklearn.preprocessing import StandardScaler

X_sca = StandardScaler()

X = X_sca.fit_transform(X)

from __future__ import division

from sklearn.model_selection import LeaveOneOut

from sklearn.metrics import accuracy_score

from sklearn.svm import SVC

loo_cv = LeaveOneOut()

correct = 0

total = 0

for train_indices, test_indices in loo_cv.split(X):

# uncomment these lines to print splits

# print("Train Indices: {}...".format(train_indices[:4]))

# print("Test Indices: {}...".format(test_indices[:4]))

# print("Training SVC model using this configuration")

X_train, X_test, y_train, y_test = X[train_indices], X[test_indices], \

y[train_indices], y[test_indices]

clf = SVC(kernel='linear', random_state=0).fit(X_train, y_train)

correct += accuracy_score(y_test, clf.predict(X_test))

total += 1

print("Accuracy: {0:.2f}".format(correct/total))

Accuracy: 0.84

Stratified KFold#

Kfold validation does not preserve the split of the output variable while splitting the data in k-folds.

Imagine training a Naive Bayes classifier using KFold validation using 10 samples where 5 are positive and 5 are negative. Since KFold randomly selects the split imagine splitting it in an unfortunate way – 1 split contains all positive samples and 1 contains all negative.

Naive Bayes classifier will calculate the prior probabilities and find it to be 100% i.e. the model will think the output is always positive which is obviously wrong.

To tackle this scenario we use Stratified split, what it would essentially do is preserve the split in the original dataset in training set, that is, if the original dataset has 50% positive and 50% negative outputs then the training set will also have 50% positive and 50% negative outputs.

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

df = pd.read_csv('Social_Network_Ads.csv')

X = df.iloc[:, 2:4] # Using 1:2 as indices will give us np array of dim (10, 1)

y = df.iloc[:, 4]

df.head()

| User ID | Gender | Age | EstimatedSalary | Purchased | |

|---|---|---|---|---|---|

| 0 | 15624510 | Male | 19 | 19000 | 0 |

| 1 | 15810944 | Male | 35 | 20000 | 0 |

| 2 | 15668575 | Female | 26 | 43000 | 0 |

| 3 | 15603246 | Female | 27 | 57000 | 0 |

| 4 | 15804002 | Male | 19 | 76000 | 0 |

# Scale

from sklearn.preprocessing import StandardScaler

X_sca = StandardScaler()

X = X_sca.fit_transform(X)

Validating Time Series data#

Time series data is data associated with a time frame, for instance stock prices.

The motivation is to predict stock price for future given the data from previous data. If we were to use any splitting techniques from above we would end up predicting past from future (due to random nature from splitting) which shouldn’t be permitted, we should always predict future from past. This can be achieved using TimeSeriesSplit

from sklearn.model_selection import TimeSeriesSplit

import numpy as np

X = np.random.rand(10, 2)

y = np.random.rand(10)

print(X)

print(y)

[[0.88375519 0.70839149]

[0.76029107 0.86953049]

[0.88220755 0.70728456]

[0.2416478 0.35790595]

[0.56243882 0.62992346]

[0.2962591 0.62643959]

[0.35616697 0.06525364]

[0.67784325 0.46470706]

[0.76170259 0.4194236 ]

[0.37069252 0.39007223]]

[0.56404909 0.23175207 0.68194322 0.47164875 0.18481909 0.76661588

0.40355237 0.31316771 0.20042839 0.57326238]

Confusion Matrix#

Confusion matrix is used only on classification tasks. It describes the following matrix

predicted true |

predicted false |

|

|---|---|---|

actual true |

True Positive |

False Negative |

actual false |

False Positive |

True Negative |

Accuracy#

Precision (Positive Predicted Value)#

Intuitively, what precision states is out of the number of times your model predicts true, how many times is it correct?

This metric penalizes heavily for False Positives.

This metric should be considered when its OK to have some false negatives but not false positives.

Imagine if your model is predicting the conclusion of a jurisdiction. Its OK to leave a criminal free, rather than punishing an innocent one.

Recall (Sensitivity)#

Intuitively, what recall states is out of the times the output is true, how many times are you correct?

This metric penalizes heavily for False Negatives.

This metric should be considered when its OK to have some false positives but not false negatives.

F1 Score#

F1 score is the harmonic mean of precision and recall.

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.metrics import confusion_matrix

X, y = make_classification()

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, stratify=y)

clf = SVC().fit(X_train, y_train)

confusion_matrix(y_test, clf.predict(X_test))

array([[9, 1],

[1, 9]])

predicted true |

predicted false |

|

|---|---|---|

actual true |

10 |

0 |

actual false |

1 |

9 |

Grid Search#

Grid Search is used for hyperparameter optimization. It allows you to specify range of values (for hyperparameters) to try on and in the end select the one with the highest cv accuracy.

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

df = pd.read_csv('Social_Network_Ads.csv')

X = df.iloc[:, 2:4] # Using 1:2 as indices will give us np array of dim (10, 1)

y = df.iloc[:, 4]

df.head()

| User ID | Gender | Age | EstimatedSalary | Purchased | |

|---|---|---|---|---|---|

| 0 | 15624510 | Male | 19 | 19000 | 0 |

| 1 | 15810944 | Male | 35 | 20000 | 0 |

| 2 | 15668575 | Female | 26 | 43000 | 0 |

| 3 | 15603246 | Female | 27 | 57000 | 0 |

| 4 | 15804002 | Male | 19 | 76000 | 0 |

# Split in training and testing

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)

# Scale

from sklearn.preprocessing import StandardScaler

X_sca = StandardScaler()

X_train = X_sca.fit_transform(X_train)

X_test = X_sca.transform(X_test)

from sklearn.svm import SVC #support vector classifier

clf = SVC(kernel='linear', random_state=0).fit(X_train, y_train)

# Grid search

from sklearn.model_selection import GridSearchCV

# insert parameters that you want to optimize

parameters = [

{

'C': [1, 10, 100, 1000],

'kernel': ['linear']

},

{

'C': [1, 10, 100, 1000],

'kernel': ['rbf'],

'gamma': [0.5, 0.1, 0.001, 0.0001],

}

]

grid_search = GridSearchCV(estimator=clf, param_grid=parameters, scoring='accuracy', cv=10, n_jobs=-1)

grid_search = grid_search.fit(X_train, y_train)

print(grid_search.best_estimator_)

print(grid_search.best_score_)

print(grid_search.best_params_)

SVC(C=1, gamma=0.5, random_state=0)

0.9133333333333334

{'C': 1, 'gamma': 0.5, 'kernel': 'rbf'}

Now we have the SVC with highest accuracy

Shortcomings of GridSearch#

While GridSearch works well, it is inefficient since it tries all the combinations which is infeasible.

Alternate approach is RandomizedSearch which will randomly select values and try them out for few iterations and in the end we select the best one.

Why would this work?#

Well, let’s say there are 10x10 = 100 possible cominations, and the best model lies in some random 5% range.

What is the probability that a randomly selected point will lie in that 5% range? Well, it’s 5% but what if we try \(n\) number of times? It will be \(5*n\%\) so if we try 10 times, we have \(50\%\) chance of landing on the sweet spot.

What would happen if we’d use GridSearch? Let’s say the sweet spot lies between 95-100. We would have to try 95 times before reaching that spot, as opposed to RandomizedSear where we can try \(10\) times with \(50%\) confidence.

df = pd.read_csv('Social_Network_Ads.csv')

X = df.iloc[:, 2:4] # Using 1:2 as indices will give us np array of dim (10, 1)

y = df.iloc[:, 4]

df.head()

| User ID | Gender | Age | EstimatedSalary | Purchased | |

|---|---|---|---|---|---|

| 0 | 15624510 | Male | 19 | 19000 | 0 |

| 1 | 15810944 | Male | 35 | 20000 | 0 |

| 2 | 15668575 | Female | 26 | 43000 | 0 |

| 3 | 15603246 | Female | 27 | 57000 | 0 |

| 4 | 15804002 | Male | 19 | 76000 | 0 |

# Split in training and testing

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)

# Scale

from sklearn.preprocessing import StandardScaler

X_sca = StandardScaler()

X_train = X_sca.fit_transform(X_train)

X_test = X_sca.transform(X_test)

from sklearn.svm import SVC #support vector classifier

clf = SVC(kernel='linear', random_state=0).fit(X_train, y_train)

from sklearn.model_selection import RandomizedSearchCV

parameters = {

'C': range(1, 11),

'kernel': ['linear']

}

random_search = RandomizedSearchCV(estimator=clf, param_distributions=parameters, scoring='accuracy', cv=10, n_jobs=-1)

random_search = random_search.fit(X_train, y_train)

\(R^2\) Intuition#

For a given model, the sum of squared errors is calculated as $\( SS_{res} = \sum_{i=0}^n (y_i - \hat{y_i})^2 \)$

For a model where output is always the average value of \(y\) is $\( SS_{tot} = \sum_{i=0}^n (y_i - y_{avg})^2 \)$

R Squared is defined as $\( R^2 = 1 - \frac{SS_{res}}{SS_{tot}} \)$

So the \(R^2\) basically depicts how different your model is from average model, if your model is equal to average model, the \(R^2\) is 0 which is bad, but if it is accurate one, the \(SS_{res}\) will be lower and \(\frac{SS_{res}}{SS_{tot}}\) will be lower, which means the \(R^2\) will be higher for an accurate model

Note that \(R^2\) can also be negative. This occurs when your model is even worse than the average model

Adjusted \(R^2\)#

Problem with \(R^2\)#

Hypothesis: \(R^2\) will never decrease

When you have a model with \(n\) variables, the model will try to minimise the error. When you add \(n + 1\) th variable, the model will try to minimise the error by assigning it a valid coefficient. If it fails to do so, i.e. if the new variable isn’t helping at all, it will simply assign it a coefficient of 0. Hence, \(R^2\) will never decrease.

So, the problem is we will never know if the model is getting better by adding additional variables, which is an important thing to know.

So, the solution is to use adjusted \(R^2\) which is given by

Where, p = number of Regressors (independent variables) n = sample size

So basically, it penalizes for the number of variables you use. It is a battle between increase in \(R^2\) vs the penalization brought by adding the additional variable

Interpreting coefficients#

Just because the coefficient of a variable is high, it doesn’t mean it is more corelated. We should look at the units while interpreting coefficient. Best way to do it is look at the change for a unit change. For instance, if the coefficient is 0.79 we can say, for a unit change i.e. for an additional dollar added into the column, the profit will increase by 79 cents

Receiver Operating Characteristic (ROC) Curve#

ROC curve is a commonly used tool to evaluate binary classifier. It is used to compare different models. ROC curve has False Positive Rate or X-axis and True Positive Rate on Y-axis. Where,

from __future__ import division

import numpy as np

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import confusion_matrix

X, y = make_classification(n_samples=1000)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, stratify=y)

clf = LogisticRegression().fit(X_train, y_train)

y_pred = clf.predict_proba(X_test)

import matplotlib

font = {'family' : 'normal',

'size' : 15}

matplotlib.rc('font', **font)

import matplotlib.pyplot as plt

from sklearn.preprocessing import Binarizer

from sklearn.metrics import auc, roc_curve

fig = plt.figure(figsize=(12, 8))

values = []

ax1 = fig.add_subplot(111)

ax2 = ax1.twiny()

def plot_roc(y_test, clf):

y_pred = clf.predict_proba(X_test)[:, 1]

fpr, tpr, ths = roc_curve(y_test, y_pred)

auc_val = auc(fpr, tpr)

ax1.plot(fpr, tpr, color="red", label="AUC = {0:.2f}".format(auc_val))

ax1.set_xlabel("False Positive Rate")

ax1.set_ylabel("True Positive Rate")

plt.title("ROC Curve\n")

ax1.set_xlim((-0.01, 1.01))

ax1.set_ylim((-0.01, 1.01))

ax1.set_xticks(np.linspace(0, 1, 11))

ax1.plot([0, 1], [0, 1], color="blue")

ax1.legend(loc="lower right")

plt.show()

plot_roc(y_test, clf)

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

Setting optimum threshold#

Most of the time we are inclined towards setting the threshold of \(0.5\) for classification tasks, but can we do better?

Using various thresholds we can output the accuracy and select the threshold with highest accuracy.

Silhoutte Distance#

Silhoutte Distance is used to study the separation between clusters. It can be used to evaluate and select the number of clusters. The formula is given by:

Where,

\(a(i)\) = average distance of point \(i\) with other members of same cluster.

\(b(i)\) = lowest of average distance of point \(i\) with member of clusters other than the one it is currently present in.

The value of \(s(i)\) ranges from:

The value will be close to 1 when \(b(i) >> a(i)\). That is when the nearest neighbor of point \(i\) is very far & the members of clusters that \(i\) is a part of aren’t very far apart. This is the case when the clustering has worked well.

The opposite case is when the value is close to -1. This happens when \(a(i) >> b(i)\). That is when the the average distance of point within cluster is more than that of the neighboring one, then this indicates that the current point would do better if it is assigned to the neighboring one.

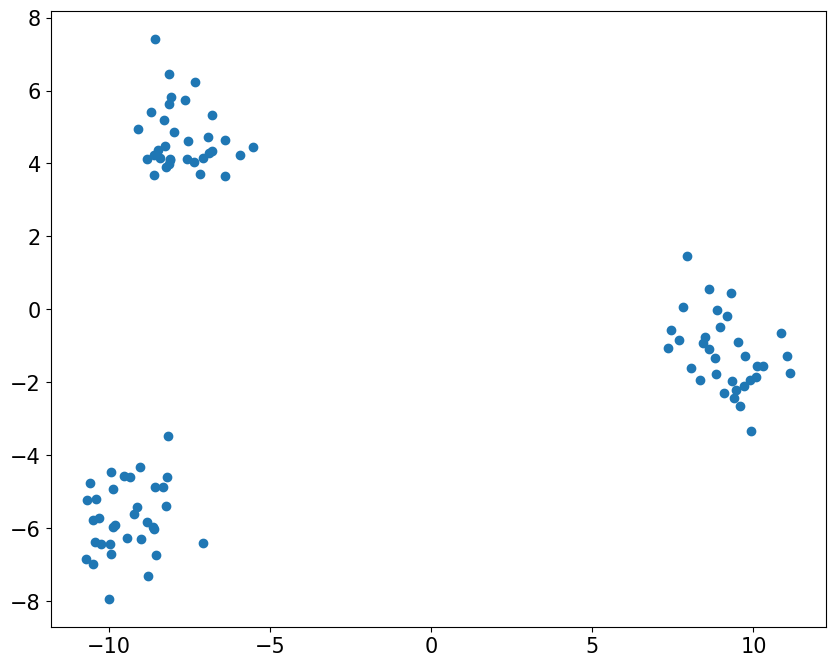

from sklearn.datasets import make_blobs

# create fake dataset

X, y = make_blobs(100, centers=3)

# Visualize data

import matplotlib.pyplot as plt

fig = plt.figure(figsize=(10, 8))

plt.scatter([x[0] for x in X], [x[1] for x in X])

plt.show()

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

# create classifier

import numpy as np

from sklearn.cluster import KMeans

clf = KMeans(n_clusters=3)

y_pred = clf.fit_predict(X)

# visualize output

from sklearn.metrics import silhouette_score

fig = plt.figure(figsize=(10, 8))

for label in np.unique(y_pred):

X_label = X[y==label]

plt.scatter([x[0] for x in X_label], [x[1] for x in X_label], label=label)

score = silhouette_score(X, y_pred)

plt.legend()

plt.title("KMeans prediction. Silhoutte score: {0:.2f}".format(score))

plt.xlabel("Dimension 1")

plt.ylabel("Dimension 2")

plt.show()

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

# visualize silhoutte sample scores

from sklearn.metrics import silhouette_samples

fig = plt.figure(figsize=(19, 8))

sample_score = silhouette_samples(X, y_pred)

plt.hist(sample_score)

plt.title("Silhoutte sample score".format(score))

plt.xlabel("Dimension 1")

plt.ylabel("Dimension 2")

plt.show()

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

findfont: Font family 'normal' not found.

Since most scores are close to 1, we conclude that the model is performing very good.